Unsupervised Learning Algorithm - Hierarchical Clustering

Hierarchical Clustering Concepts

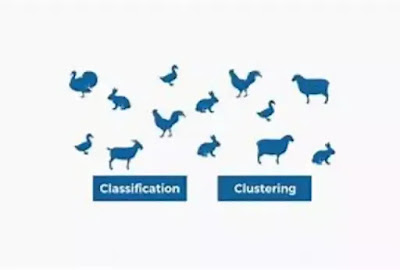

Hierarchical clustering is a popular unsupervised machine learning algorithm that groups similar data points in a dataset. Unlike K-Means clustering, it does not require the user to specify the number of clusters in advance. The algorithm builds a hierarchy of clusters. In its common agglomerative (bottom-up) form, each data point starts as its own cluster, and the two most similar clusters are merged repeatedly until one cluster remains or a stopping criterion is met. The resulting hierarchy is usually drawn as a tree called a dendrogram.
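To make the bottom-up merging concrete, here is a minimal sketch using SciPy's `linkage` function on five made-up one-dimensional points. The data is hypothetical and was chosen only so that the merge order is easy to follow. Each row of the returned matrix records one merge.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage

# Five hypothetical 1-D points: two tight pairs and one distant point
X = np.array([[1.0], [1.2], [5.0], [5.3], [12.0]])

# Single linkage: the distance between two clusters is the distance
# between their closest members
Z = linkage(X, method="single")

# Each row of Z is one merge: [cluster_a, cluster_b, distance, new_size].
# Indices 0..4 are the original points; row i creates cluster n + i.
for step, (a, b, dist, size) in enumerate(Z, start=1):
    print(f"step {step}: merge {int(a)} and {int(b)} "
          f"at distance {dist:.2f} -> size {int(size)}")
```

The two tight pairs merge first, the pairs then merge with each other, and the distant point joins last, at the largest merge distance.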

Here is an example of how hierarchical clustering works. Suppose we have a dataset of customers, where each record includes the customer's age, income, and spending behaviour, and we want to group customers with similar spending behaviour for targeted marketing campaigns. Hierarchical clustering first assigns each customer to their own cluster and then successively merges the most similar clusters until a stopping criterion is met, such as reaching the desired number of customer segments.
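A minimal sketch of the customer example, assuming scikit-learn's `AgglomerativeClustering` and randomly generated stand-in data. The three feature columns and the group means are hypothetical, not real customer data. The features are scaled first because income would otherwise dominate the Euclidean distances.

```python
import numpy as np
from sklearn.cluster import AgglomerativeClustering
from sklearn.preprocessing import StandardScaler

# Synthetic, hypothetical customer records: [age, annual income, monthly spend].
# These numbers are randomly generated for illustration only.
rng = np.random.default_rng(0)
young_low = rng.normal([25, 30_000, 300], [3, 4_000, 50], size=(20, 3))
mid_high = rng.normal([40, 90_000, 1_500], [4, 8_000, 150], size=(20, 3))
older_mid = rng.normal([60, 55_000, 600], [4, 6_000, 80], size=(20, 3))
customers = np.vstack([young_low, mid_high, older_mid])

# Put features on a common scale so income (tens of thousands)
# does not dominate the distances
scaled = StandardScaler().fit_transform(customers)

# Agglomerative (bottom-up) clustering with Ward linkage. Here we ask for
# three segments; alternatively, pass n_clusters=None with distance_threshold.
model = AgglomerativeClustering(n_clusters=3, linkage="ward")
segments = model.fit_predict(scaled)

for label in np.unique(segments):
    members = customers[segments == label]
    print(f"segment {label}: {len(members)} customers, "
          f"mean monthly spend {members[:, 2].mean():.0f}")
```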

Hierarchical Clustering Algorithm

- Define the problem and collect data.

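- Choose a linkage method, i.e., how the distance between two clusters is measured (e.g., single, complete, average, or Ward linkage).

- Compute the distance matrix between all data points (e.g., using Euclidean distance).

- Merge the two closest clusters into one. At the start, every data point is its own cluster.

- Update the distances between the new cluster and the remaining clusters using the chosen linkage method.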

- Repeat the previous two steps until all data points belong to one cluster or until the desired number of clusters is reached.

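- Evaluate the resulting clusters. Because the data is unlabelled, use internal measures such as the silhouette score, or domain knowledge, rather than accuracy on a labelled test set.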
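The steps above can be sketched as a naive from-scratch implementation in NumPy. This is an illustration for small inputs only: the function name `agglomerative` and its parameters are hypothetical, and libraries such as SciPy use much faster algorithms.

```python
import numpy as np

def agglomerative(X, n_clusters, linkage="single"):
    """Naive agglomerative clustering (hypothetical helper, small inputs only).

    linkage: "single" (closest pair), "complete" (farthest pair),
    or "average" (mean of all pairwise distances).
    """
    X = np.asarray(X, dtype=float)
    # Distance matrix between all data points (Euclidean)
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    # Every point starts in its own cluster
    clusters = [[i] for i in range(len(X))]
    combine = {"single": np.min, "complete": np.max, "average": np.mean}[linkage]

    while len(clusters) > n_clusters:
        best = None
        # Distance between every pair of clusters under the chosen linkage
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = combine(D[np.ix_(clusters[a], clusters[b])])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        # Merge the two closest clusters; b > a, so removing b keeps index a valid
        clusters[a] = clusters[a] + clusters.pop(b)

    # Convert the list of clusters into one label per point
    labels = np.empty(len(X), dtype=int)
    for label, members in enumerate(clusters):
        labels[members] = label
    return labels

points = [[0, 0], [0, 1], [5, 5], [5, 6], [10, 0]]
print(agglomerative(points, n_clusters=3, linkage="complete"))  # [0 0 1 1 2]
```

Stopping at `n_clusters` corresponds to "the desired number of clusters is reached"; with `n_clusters=1` the loop runs until all points belong to one cluster.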

Here is sample Python code for hierarchical clustering using the SciPy library (scikit-learn is used only to generate sample data):

python code

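from scipy.cluster.hierarchy import dendrogram, linkage
from sklearn.datasets import make_blobs
import matplotlib.pyplot as plt

# Generate sample data: 100 points around 3 centres
X, y = make_blobs(n_samples=100, centers=3, random_state=42)

# Create a linkage matrix (Ward linkage merges the pair of clusters
# that least increases the total within-cluster variance)
link_matrix = linkage(X, method='ward')

# Plot the dendrogram: the height of each join is the merge distance
dendrogram(link_matrix)
plt.xlabel('Sample index')
plt.ylabel('Merge distance')
plt.show()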
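Because hierarchical clustering is unsupervised, evaluation usually relies on internal measures rather than a labelled test set. As a sketch, assuming the same data and linkage as in the example above, the tree can be cut into flat clusters with SciPy's `fcluster` and scored with scikit-learn's `silhouette_score`:

```python
from scipy.cluster.hierarchy import fcluster, linkage
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Same sample data and Ward linkage as in the example above
X, y = make_blobs(n_samples=100, centers=3, random_state=42)
link_matrix = linkage(X, method="ward")

# Cut the dendrogram into at most 3 flat clusters (labels start at 1)
labels = fcluster(link_matrix, t=3, criterion="maxclust")

# Internal evaluation, no ground truth needed: values near 1 mean compact,
# well-separated clusters; values near 0 mean overlapping clusters
print("silhouette:", silhouette_score(X, labels))
```

Since `make_blobs` also returns true labels `y`, an external measure such as `adjusted_rand_score(y, labels)` could be used here too; real data rarely offers that.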

Benefits of Hierarchical Clustering Algorithm:

- Can create a hierarchy of clusters for a better understanding of the data.

- It can help in identifying patterns and relationships within the data.

- Does not require specifying the number of clusters in advance.

- Can be used for anomaly detection and identification.
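The point about not fixing the number of clusters in advance can be illustrated with a small sketch. It uses synthetic `make_blobs` data, and average linkage and the 70% threshold are arbitrary choices. The hierarchy is built once, and flat clusterings at different granularities are read off the same tree with `fcluster`.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from sklearn.datasets import make_blobs

X, _ = make_blobs(n_samples=60, centers=4, random_state=0)

# Build the full hierarchy once; no number of clusters is needed here
Z = linkage(X, method="average")

# Read off flat clusterings at several granularities from the same tree
for k in (2, 3, 4, 5):
    labels = fcluster(Z, t=k, criterion="maxclust")
    print(k, "clusters -> sizes", np.bincount(labels)[1:])

# Alternatively, cut at a merge height instead of a cluster count
labels_by_height = fcluster(Z, t=0.7 * Z[:, 2].max(), criterion="distance")
print("clusters below 70% of the max merge height:", len(np.unique(labels_by_height)))
```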

Advantages of Hierarchical Clustering Algorithm:

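- Outliers tend to join the hierarchy only at large merge distances, so they are easy to spot in the dendrogram (although noise can distort single-linkage results through chaining).

- The algorithm works with any distance metric, and single linkage can find non-convex (e.g., elongated) cluster shapes that K-Means misses.

- The algorithm can provide insights into the data structure and patterns.

- Cluster representatives (e.g., each cluster's mean) can stand in for the original points, compressing the data and speeding up downstream computation.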
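One way to read the data-compression point is to summarise each cluster by a prototype. The sketch below is an illustration under stated assumptions (synthetic data, 20 clusters chosen arbitrarily): it keeps 20 cluster means plus their sizes instead of 500 points.

```python
import numpy as np
from sklearn.cluster import AgglomerativeClustering
from sklearn.datasets import make_blobs

X, _ = make_blobs(n_samples=500, centers=5, random_state=1)

# Group the 500 points into 20 small clusters
labels = AgglomerativeClustering(n_clusters=20, linkage="ward").fit_predict(X)

# Summarise each cluster by its mean (prototype) and its size (weight);
# downstream algorithms can work on these 20 weighted rows
prototypes = np.array([X[labels == k].mean(axis=0) for k in range(20)])
weights = np.bincount(labels, minlength=20)

# "Decompress": replace every point by its cluster's prototype
X_approx = prototypes[labels]
print("stored rows:", len(prototypes), "instead of", len(X))
print("mean reconstruction error:", np.linalg.norm(X - X_approx, axis=1).mean())
```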

Disadvantages of Hierarchical Clustering Algorithm:

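- Standard agglomerative clustering stores an n × n distance matrix (O(n²) memory) and needs between O(n²) and O(n³) time depending on the linkage and implementation, so it becomes expensive for large datasets.

- The algorithm may not work well for high-dimensional data, where distances between points become less informative.

- Interpreting the dendrogram, including deciding where to cut it, can be subjective.

- The algorithm is not directly suited to categorical variables, because the usual Euclidean distance does not apply; a categorical dissimilarity such as Hamming distance is needed instead.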
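For categorical data, one workaround is to compute a categorical dissimilarity and pass it to the linkage step directly. A minimal sketch with made-up records, assuming SciPy's Hamming metric (the fraction of attributes that differ) and average linkage:

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.spatial.distance import pdist

# Hypothetical categorical records: [colour, size, material]
records = [
    ["red", "small", "cotton"],
    ["red", "small", "wool"],
    ["blue", "large", "leather"],
    ["blue", "large", "leather"],
    ["red", "medium", "cotton"],
]

# Integer-encode each column; the codes are labels only, their order means nothing
columns = list(zip(*records))
encoded = np.column_stack([np.unique(col, return_inverse=True)[1] for col in columns])

# Hamming distance only checks codes for equality, never subtracts them
dists = pdist(encoded, metric="hamming")

# Ward assumes Euclidean geometry, so use average linkage on the
# precomputed condensed distance matrix
Z = linkage(dists, method="average")
labels = fcluster(Z, t=2, criterion="maxclust")
print(labels)
```

The two identical "blue, large, leather" records form one group and the three red items form the other.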
